Sep 25, 2019 | Kelly Cotton, Patrick Adelman, Sharath KoorathotaSpecial thanks to Pupil Labs. This project was originally presented at the NYC Media Lab Summit 2019 - and won second prize!Watch the video here.

See the poster for the project here.

Over a billion hours of video is watched each day on YouTube alone. Add in Vimeo, Netflix, Hulu, and other online video services and the amount of digital video content that is being produced and consumed is staggering. With all this content, how can we know what people actually pay attention to? Traditional measures of “engagement” on YouTube include things like shares, likes, minutes watched, etc.

These methods aren't ideal because they don’t take into account what people are actually doing while they’re watching the video. How is their heart rate changing? Are they sweating more? How big are their pupils? By looking at these unconscious measures of interest, we can better understand what people like to watch – and know what they’ll want to watch next.

Project Fovea aims to integrate eye-tracking and deep learning into the creative process. We created a video production pipeline that uses real-time eye-tracking information to model the viewers attentional behavior and seamlessly predict what will maintain their level of interest. The pipeline has three main steps: 1) training a model to detect attention based on eye tracking measures, 2) pre-processing video data, and 3) collecting viewing data. We put all of this together to predict the video that will most engage the viewer based on their personal viewing behavior.

Step 1 - The Model

The first question to address was what happens to people's eyes when they're interested in what they're watching? We had half of the puzzle: publicly available datasets, from The DIEM Project and Actions in the Eye. Now we needed a way to comb through this data and learn something from it.

Enter: deep learning

Because video frames are presented sequentially, we utilized a long short-term memory (LSTM) model on these datasets to estimate attention during video-viewing. During the training process, the model used fixation metrics such as fixation position and duration to predict future pupil diameter. We used a standard implementation for the regression LSTM: minimization of mean squared error, non-linear activation in between layers, dropout and bi-directionality.

In short, the model inputs were fixation-related information (positional, durational and pupil diameters during fixations) and the output was a prediction of the pupil diameter for the next 5 seconds.

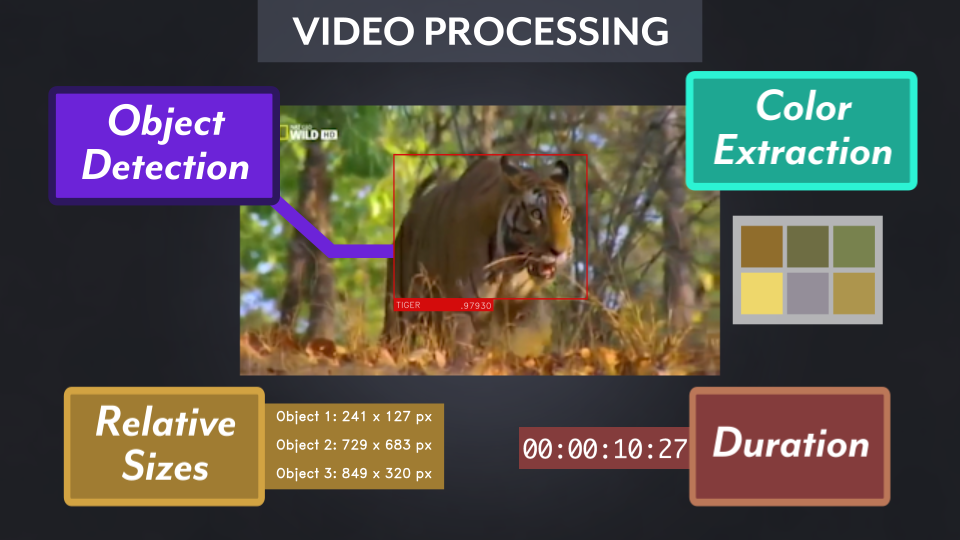

Step 2 - Video Processing

The next step was to figure out what objects were in the videos, what they looked like, and how they moved during scenes. We processed our data into a standardized .fvid format to gather the video metadata.

We compiled documentary clips featuring different animals and used custom object detection to track the animals that we were interested in (in our case, tigers, polar bears, dolphins, and eagles). We also generated color metadata for each frame so that when we want to create a video for a user later, we can pull clips based on both color and object similarity.

Object Detection

We used ImageAI to train a custom object detection model, built with transfer learning on top of the YOLO v3 model.

Color Information

We extracted RGB values for each frame of video. During subsequent viewing, a Wasserstein distance is called to compare frames based on color information, searching for the closest video in .fvid space.

Object Tracking

We used a centroid tracker to smooth out object motion. The centroid tracker finds the center of an object in one frame, then compares it to the location of the center of the object in the next frame. It uses this distance to follow an object through the video, and also determine when a new object has entered the frame.

Step 3 - Real Time Eye Tracking

The final step involved an actual viewer. Using the Pupil Core eye tracker, we were able to collect real time pupil and fixation information from a viewer.

The eye tracker has three cameras: a scene camera and two infrared cameras. The scene camera faces out towards the world, while the infrared cameras faces inward towards the wearer’s eyes. It uses NSLR-HMM eye movement classification algorithm to calculate eye movements. More information about the specifics of the Pupil Labs eye tracker can be found here.

As the final piece of our pipeline, we first showed the viewer two short clips of each animal. During this initial exposure phase, the model combined information about what’s happening in the video and what the viewer’s eye movements look like.

The model used this information to create a ranked queue of videos to show next. Once the exposure phase was done, the first video in the queue (which the model thinks will be most interesting to the viewer and most visually similar to the last clip) was presented. The model continued collecting information and updating the queue. Over time, someone’s interest may wane and the model takes this into account and adjusts the queue accordingly.

Viewer Experience

So how does this all look to the viewer? So much is happening in the background, but the viewer simply puts on the eye-tracker and watches a video. They can’t even tell where the “data collection video” ends and the “predicted videos” begins. There's no need to wait to click on the next recommended video.

Our pipeline allows seamless transition between videos that will maintain a viewers attention, and for an indefinite amount of time, since our data collection and modeling is real-time. Even while a viewer is watching a predicted video, the model is still gathering data and updating so that it knows what the viewers wants to see. Sick of tiger videos? Let’s switch to a polar bear fight. Too much action? How about a nice peaceful dolphin swim. As the viewers continues watching, the model gets even more accurate at making predictions. To the viewer though, it just looks like one video.

Future Applications

The process we have described so far has more applications than just making an endless stream of dolphin videos.

Virtual Reality-based games require massive amounts of processing power to render realistic scenes that can adapt to a player. This process could be simplified by only rendering what a player is actually attending to. Furthermore, the predictive power of our model would give the game a good idea of what a player will look at next, allowing it to avoid rendering resource-heavy objects if it knows the gamer seems to be interested in only certain things.

The advertising process, presently, relies on talented creative and editing teams or expensive user testing for the best results. Using our pipeline, advertising companies can include focus groups in the creative process more directly, creating variations of videos that specifically appeal to individuals and demographics on-the-fly. Furthermore, advertising content can be presented accounting for attention and relevant objects present on displays. This allows less distracted viewing of content and greater retention.

Content-creators, whether small independent YouTubers or network TV channels, all want to know - what do people want to see? Project Fovea can generate personalized videos for each viewer. These videos edit themselves and constantly update based on viewer interest. Most smartphones and webcams can easily collect eye tracking data, so at-home viewers can opt-in to get a personalized video experience.

The future of learning is online. MOOC, distance learning programs, even workplace training videos. Using our real-time data collection, instructors could quickly verify that people are actually watching and paying attention to the video content. If they find that people’s attention is slipping, our pipeline can easily present a different type of video to get someone back on track when our attentional measures predict they’ve lost interest (or are about to).

Project Fovea is an innovative collision of worlds: we’ve taken methodology from the cognitive science and computer science fields and combined them to function in the creative process. We’ve taken information gleaned from a video and combined it with measures of viewer engagement to create a powerful predictive model that knows what a viewer wants, maybe even before they do. This project has multiple applications within the video production field and beyond.

About Us

Fractal Media is a boutique video agency based in NYC that works at the intersection of creativity and technology. In addition to full-service video production, we conduct research in computer science and psychology to leverage state-of-the-art tools for storytelling. Our researchers are also affiliated with Columbia University and CUNY Grad.

Over the past year, our advertising and original content in science and technology has received over 3 million views online and coverage from Slate, NPR, Discovery Channel and more.

Project Fovea involves the integration of eye tracking and video production with the goal of understanding how attention can be used in the creation process. We will be seeking funding over the next year to explore the use of our framework in video editing and content presentation in AR/VR.